That Rugged Raw

It has been a busier-than-usual week on campus, and I've had a pretty packed conference schedule, so I've fallen much too far behind on my New Year's Resolution:

Ship Copy.

So, in its most-purest, most-rawest, most-honest form, you can find a number of raw transcripts in the new repo: decause/raw

So, what does that look like?

    .
    ├── libreplanet
    │   └── 2015
    │       ├── closingkeynote-libreplanet-karensandler.txt
    │       ├── debnicholson-friday.txt
    │       ├── freesoftwareawards.txt
    │       └── highpriorityprojs-libreplanet-friday.txt
    ├── pycon
    │   └── 2015
    │       ├── ninapresofeedback.txt
    │       ├── pycon-day2-keynotes.txt
    │       ├── pyconedusummit2015.txt
    │       └── scherer-pycon-ansible-day2.txt
    └── RIT
        └── 2015
            ├── biella-astra-raw.txt
            ├── biella-molly-guest-lecture.txt
            └── molly-sauter-where-is-the-digital-street.txt

    6 directories, 13 files

    $ wc -w libreplanet/2015/*.txt
     2287 libreplanet/2015/closingkeynote-libreplanet-karensandler.txt
      139 libreplanet/2015/debnicholson-friday.txt
      586 libreplanet/2015/freesoftwareawards.txt
     2297 libreplanet/2015/highpriorityprojs-libreplanet-friday.txt
     5309 total

    $ wc -w pycon/2015/*.txt
      106 pycon/2015/ninapresofeedback.txt
     2233 pycon/2015/pycon-day2-keynotes.txt
     1844 pycon/2015/pyconedusummit2015.txt
       63 pycon/2015/scherer-pycon-ansible-day2.txt
     4246 total

    $ wc -w RIT/2015/*.txt
     4521 RIT/2015/biella-astra-raw.txt
     2489 RIT/2015/biella-molly-guest-lecture.txt
     1964 RIT/2015/molly-sauter-where-is-the-digital-street.txt
     8974 total

    18529 total total

18,529 words, or just over 41 pages of raw text in total.
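If you want to reproduce those totals without adding up `wc` output by hand, here's a minimal Python sketch that walks the repo, counts whitespace-separated words per `.txt` file (roughly what `wc -w` does), and sums them per directory and overall. The 450-words-per-page figure is an assumption, picked so that 18,529 words lands at about 41 pages; the helper names are hypothetical.

```python
from pathlib import Path

# Assumption: ~450 words per printed page, used only for the page estimate.
WORDS_PER_PAGE = 450


def count_words(text: str) -> int:
    # str.split() with no arguments splits on runs of whitespace,
    # which is close to how `wc -w` delimits words.
    return len(text.split())


def tally(root: Path) -> dict[str, dict[str, int]]:
    """Map each directory (relative to root) to {filename: word count} for its .txt files."""
    totals: dict[str, dict[str, int]] = {}
    for path in sorted(root.rglob("*.txt")):
        rel_dir = path.parent.relative_to(root).as_posix()
        text = path.read_text(encoding="utf-8", errors="replace")
        totals.setdefault(rel_dir, {})[path.name] = count_words(text)
    return totals


def grand_total(totals: dict[str, dict[str, int]]) -> int:
    return sum(sum(files.values()) for files in totals.values())


def pages(words: int) -> float:
    return words / WORDS_PER_PAGE


def report(root: Path) -> list[str]:
    """Format per-file counts, per-directory totals, and a grand total."""
    totals = tally(root)
    lines = []
    for directory, files in totals.items():
        for name, words in files.items():
            lines.append(f"{words:>6} {directory}/{name}")
        lines.append(f"{sum(files.values()):>6} total ({directory})")
    words = grand_total(totals)
    lines.append(f"{words} words, about {pages(words):.1f} pages")
    return lines
```

Run from the repo root, `print("\n".join(report(Path("."))))` prints something shaped like the `wc` output above, plus the combined total and page estimate.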

There is a flaw in my workflow. A raw transcript has some utility, but mostly when it is delivered in real time. After the fact, there is a lot of post-production work to be done, like spell checking. Even after that, if there is a video, the transcript is partial and incomplete by comparison. This bothers many potential downstream consumers of raw text. So where does that leave us?
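Spell checking is the most mechanical piece of that post-production. Here's a minimal standard-library sketch of a first pass: pull out word tokens, flag anything missing from a known vocabulary, and let `difflib.get_close_matches` suggest candidates. The tiny `VOCAB` set and the `suspicious_words` helper are hypothetical stand-ins; a real pass would need a full word list plus speaker names and jargon, so expect it to flag plenty of legitimate words.

```python
import difflib
import re
from typing import Dict, List, Set

# Hypothetical vocabulary for illustration; a real pass would load a full word
# list plus speaker names and project jargon.
VOCAB = {"the", "a", "of", "free", "software", "keynote", "transcript", "python", "ansible"}

WORD_RE = re.compile(r"[A-Za-z']+")


def suspicious_words(text: str, vocab: Set[str]) -> Dict[str, List[str]]:
    """Map each unknown word (lowercased, first occurrence) to close vocabulary matches."""
    flagged: Dict[str, List[str]] = {}
    for token in WORD_RE.findall(text):
        word = token.lower()
        if word in vocab or word in flagged:
            continue
        # get_close_matches ranks candidates by SequenceMatcher similarity;
        # cutoff=0.8 keeps only fairly close suggestions.
        flagged[word] = difflib.get_close_matches(word, vocab, n=3, cutoff=0.8)
    return flagged
```

For example, `suspicious_words("The keynte of free sofware", VOCAB)` flags `keynte` and `sofware`, suggesting `keynote` and `software` respectively.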

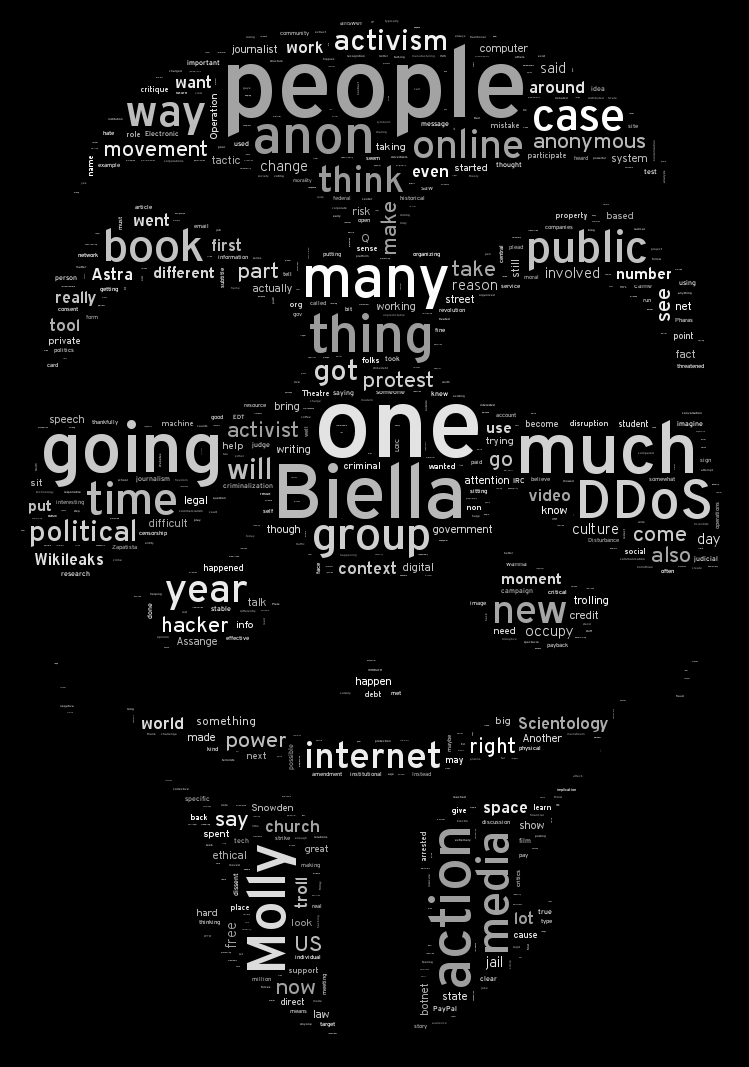

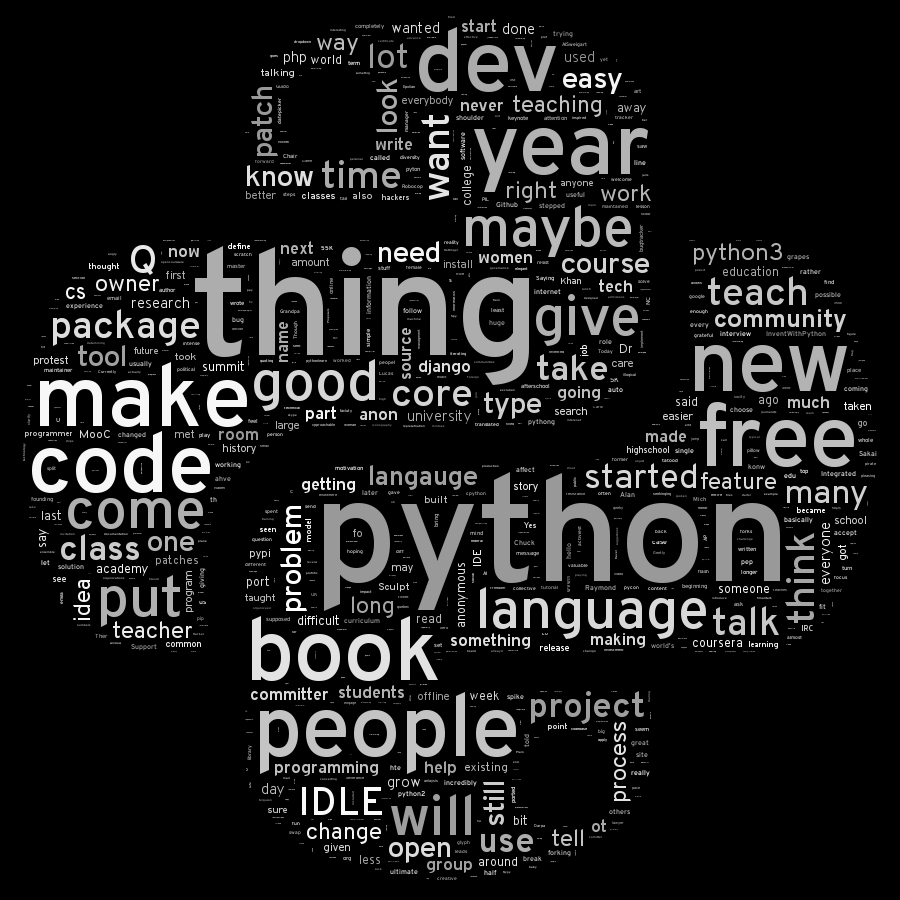

Word Clouds

I've played with word_cloud before in my decause/presignaug repo, for building presidential inauguration visualizations last year. Since then, word_cloud has gotten much more sophisticated: it now uses scikit-learn and numpy, and it can fit word clouds within images!
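Under the hood, a word cloud starts from word frequencies: count the words, drop stopwords, and size each word by how often it appears. Here's a dependency-free sketch of just that step, with a small hypothetical stopword list and a simple linear font-size mapping; the real word_cloud package does its own scaling and layout. Recent releases of the wordcloud package can also take a frequency dict like this directly via `WordCloud.generate_from_frequencies`.

```python
import re
from collections import Counter
from typing import Dict

# Hypothetical, deliberately tiny stopword list; the wordcloud package ships a larger STOPWORDS set.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "that", "i", "you", "so"}


def word_frequencies(text: str, max_words: int = 50) -> Dict[str, float]:
    """Return the most common non-stopwords, scaled so the top word has weight 1.0."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 1)
    top = counts.most_common(max_words)
    if not top:
        return {}
    highest = top[0][1]
    return {word: count / highest for word, count in top}


def font_sizes(freqs: Dict[str, float], min_size: int = 10, max_size: int = 80) -> Dict[str, int]:
    """Map relative frequency linearly onto a font-size range (a simplification)."""
    return {w: round(min_size + f * (max_size - min_size)) for w, f in freqs.items()}
```

Feeding it a transcript such as `pycon/2015/pycon-day2-keynotes.txt` gives the weights a cloud would size its words by; the linear mapping is a design shortcut, and real layouts usually compress the range so rare words stay legible.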

List of Issues/Fixes

- You'll need to `pip install cython` first.
- You'll need to `sudo yum install freetype-devel` (probably not necessary, since pointing at a different `.ttf` typeface alleviates this...).
- You'll have to edit your `FONT_PATH` within `word_cloud.py`.
- Image masks *must* be saved as greyscale, not RGB images (this was a biggie, and I wouldn't have figured it out if GIMP didn't display the color encoding in the file status bar when you open things :) ).
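The greyscale gotcha comes down to shape: an RGB image carries three values per pixel, while a greyscale mask carries one. In practice Pillow's `Image.open(path).convert("L")` does the conversion for you; this dependency-free sketch just shows what it amounts to, using the ITU-R 601-2 luma weights Pillow documents for mode "L", plus a check of how many channels a mask has. The helper names are hypothetical, and `drawable` assumes the convention current wordcloud docs describe (pure white pixels are masked out); older releases may differ.

```python
from typing import List, Sequence, Union

# A pixel is either one grey value (0-255) or an RGB/RGBA tuple.
Pixel = Union[int, Sequence[int]]


def channels(rows: Sequence[Sequence[Pixel]]) -> int:
    """Return 1 for a greyscale image, or the tuple length (3 = RGB, 4 = RGBA)."""
    first = rows[0][0]
    return 1 if isinstance(first, int) else len(first)


def luma(r: int, g: int, b: int) -> int:
    # ITU-R 601-2 luma weights (the ones Pillow documents for mode "L"),
    # rounded to the nearest integer.
    return (299 * r + 587 * g + 114 * b + 500) // 1000


def to_greyscale(rows: Sequence[Sequence[Pixel]]) -> List[List[int]]:
    """Convert RGB(A) rows to single-channel grey; greyscale input is copied as-is."""
    if channels(rows) == 1:
        return [list(row) for row in rows]
    # Any alpha channel is simply ignored in this sketch.
    return [[luma(*px[:3]) for px in row] for row in rows]


def drawable(grey_rows: Sequence[Sequence[int]], white: int = 255) -> List[List[bool]]:
    """Assumption: pure white pixels are masked out and everything else can hold words
    (the convention current wordcloud docs describe; older releases may differ)."""
    return [[value != white for value in row] for row in grey_rows]
```

A 2x2 RGB mask of white, black, red, and green converts to `[[255, 0], [76, 150]]`, and only the white pixel ends up masked out.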

pycon.py and pycon-greys.py.